Google has unveiled its latest AI model, Gemini 1.5, which features what the company calls an “experimental” one million token context window.

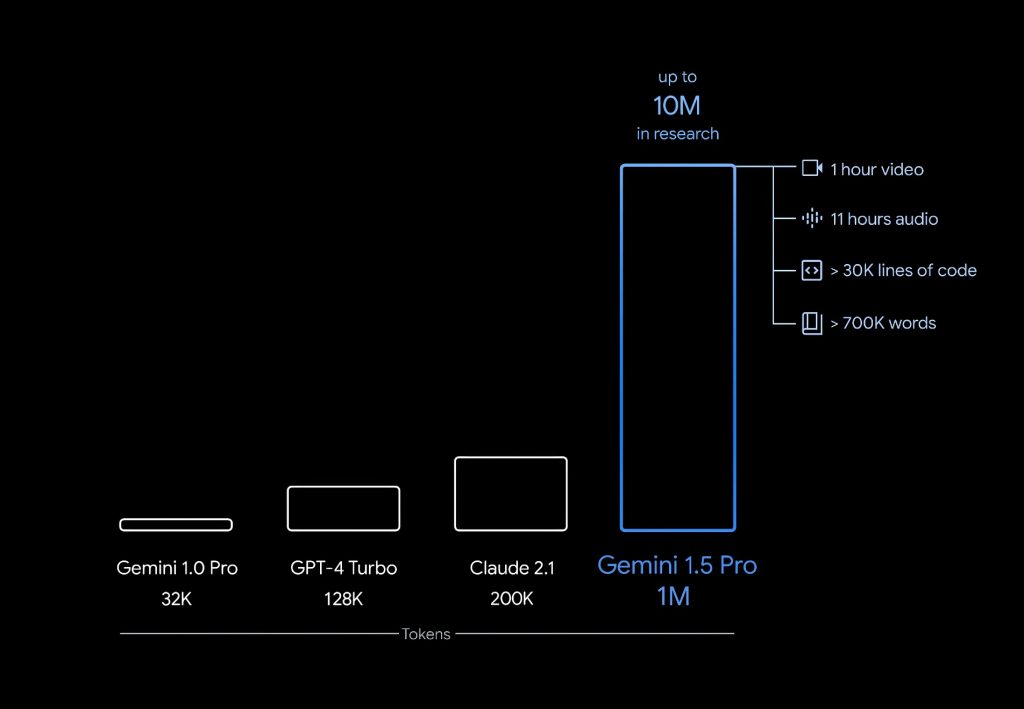

The new capability allows Gemini 1.5 to process extremely long inputs – up to one million tokens – in a single prompt to understand context and meaning. This dwarfs the context windows of previous AI systems like Claude 2.1 and GPT-4 Turbo, which max out at 200,000 and 128,000 tokens respectively.

“Gemini 1.5 Pro achieves near-perfect recall on long-context retrieval tasks across modalities, improves the state-of-the-art in long-document QA, long-video QA and long-context ASR, and matches or surpasses Gemini 1.0 Ultra’s state-of-the-art performance across a broad set of benchmarks,” said Google researchers in a technical paper.

The efficiency of Google’s latest model is attributed to its innovative Mixture-of-Experts (MoE) architecture.

“While a traditional Transformer functions as one large neural network, MoE models are divided into smaller ‘expert’ neural networks,” explained Demis Hassabis, CEO of Google DeepMind.

“Depending on the type of input given, MoE models learn to selectively activate only the most relevant expert pathways in its neural network. This specialisation massively enhances the model’s efficiency.”
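The routing idea described in that quote can be illustrated with a toy example. The sketch below is not Google's implementation, which the company has not detailed here; it assumes a generic top-k gating scheme, and the names `moe_forward`, `make_expert` and `gate_weights` are hypothetical. A router scores every expert for a given input, only the highest-scoring experts run, and their outputs are blended using softmax weights.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale):
    """Hypothetical stand-in for an expert network: scales its input."""
    return lambda x: [scale * v for v in x]


def moe_forward(x, gate_weights, experts, top_k=2):
    """Run only the top_k highest-scoring experts and blend their outputs.

    Assumption: one gate vector per expert, scored by dot product with x.
    """
    scores = [sum(w * v for w, v in zip(row, x)) for row in gate_weights]
    # Indices of the experts the router considers most relevant.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    weights = softmax([scores[i] for i in chosen])

    output = [0.0] * len(x)
    for weight, index in zip(weights, chosen):
        expert_out = experts[index](x)  # experts not in `chosen` are never called
        output = [o + weight * v for o, v in zip(output, expert_out)]
    return output, chosen


experts = [make_expert(s) for s in (1.0, 2.0, 3.0, 4.0)]
gate_weights = [[1, 0], [0, 1], [-1, 0], [0, -1]]
output, chosen = moe_forward([2.0, 1.0], gate_weights, experts, top_k=2)
print("experts used:", chosen)
```

In this toy setup only two of the four experts do any work for the given input, which is the source of the efficiency gain the quote refers to.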

To demonstrate the power of the 1M token context window, Google showed how Gemini 1.5 could ingest the entire 326,914-token Apollo 11 flight transcript and then accurately answer specific questions about it. It also summarised key details from a 684,000-token silent film when prompted.
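To put those figures in perspective, a rough check using only the token counts quoted in this article shows which context windows could hold each demo input in one pass. This is a simplified sketch: the helper name `models_that_fit` is hypothetical, and it ignores the tokens needed for the prompt and the model's response.

```python
# Context window sizes and demo input sizes as quoted in this article.
CONTEXT_WINDOWS = {
    "Gemini 1.5 (experimental)": 1_000_000,
    "Claude 2.1": 200_000,
    "GPT-4 Turbo": 128_000,
}
DEMO_INPUTS = {
    "Apollo 11 flight transcript": 326_914,
    "Silent film": 684_000,
}


def models_that_fit(input_tokens, windows=CONTEXT_WINDOWS):
    """Return the models whose context window can hold the input whole."""
    return [name for name, limit in windows.items() if input_tokens <= limit]


for title, tokens in DEMO_INPUTS.items():
    print(f"{title} ({tokens:,} tokens): {models_that_fit(tokens)}")
```

On these numbers, both demo inputs exceed the 200,000 and 128,000 token limits and fit only within the one million token window.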

Google is initially providing developers and enterprises free access to a limited Gemini 1.5 preview with a one million token context window. A 128,000 token general release for the public will come later, along with pricing details.

For now, the one million token capability remains experimental. But if it lives up to its early promise, Gemini 1.5 could set a new standard for AI’s ability to understand complex, real-world text.

Developers interested in testing Gemini 1.5 Pro can sign up in AI Studio. Google says that enterprise customers can reach out to their Vertex AI account team.

(Image Credit: Google)

