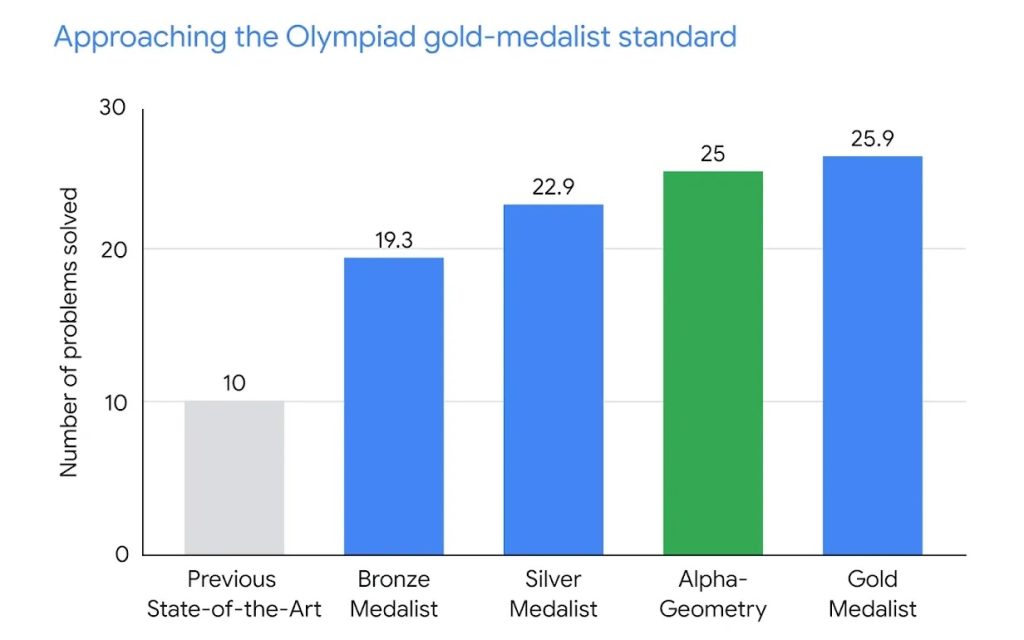

DeepMind, the UK-based AI lab owned by Google’s parent company Alphabet, has developed an AI system called AlphaGeometry that can solve complex geometry problems at a level close to that of human Olympiad gold medalists.

In a new paper in Nature, DeepMind revealed that AlphaGeometry was able to solve 25 out of 30 benchmark geometry problems from past International Mathematical Olympiad (IMO) competitions within the standard time limits. This nearly matches the average of 25.9 problems solved by human gold medalists on the same set.

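The AI system combines a neural language model with a rule-bound deduction engine. The language model suggests new constructions, such as extra points or lines, that might be useful, while the deduction engine works through the logical consequences step by step until the theorem is proved.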
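The pairing of a proposer with a deduction engine can be illustrated with a deliberately tiny Python sketch. It is not DeepMind's code: the string-based facts, the two rules, and the `toy_proposer` function (a hard-coded stand-in for the language model) are all assumptions made up for illustration.

```python
from typing import Callable, FrozenSet, List, Optional, Set, Tuple

Fact = str
Rule = Tuple[FrozenSet[Fact], Fact]  # (required facts, conclusion)
Proposer = Callable[[Set[Fact], Fact], Optional[Fact]]


def deduce(facts: Set[Fact], rules: List[Rule]) -> Set[Fact]:
    """Forward-chain: apply rules until no new fact appears."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for needs, conclusion in rules:
            if needs <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


def solve(premises: Set[Fact], goal: Fact, rules: List[Rule],
          propose: Proposer, max_steps: int = 5) -> Optional[List[Fact]]:
    """Alternate deduction and proposals; return the constructions used."""
    known = deduce(premises, rules)
    added: List[Fact] = []
    for _ in range(max_steps):
        if goal in known:
            return added
        suggestion = propose(known, goal)  # the "language model" step
        if suggestion is None:
            return None  # the proposer has nothing left to try
        added.append(suggestion)
        known = deduce(known | {suggestion}, rules)
    return added if goal in known else None


# Hypothetical toy problem: the base angles of an isosceles triangle.
RULES: List[Rule] = [
    (frozenset({"AB = AC", "M is the midpoint of BC"}),
     "triangles ABM and ACM are congruent"),
    (frozenset({"triangles ABM and ACM are congruent"}),
     "angle ABC = angle ACB"),
]
PREMISES: Set[Fact] = {"AB = AC"}
GOAL: Fact = "angle ABC = angle ACB"


def toy_proposer(known: Set[Fact], goal: Fact) -> Optional[Fact]:
    """Stand-in for the language model: offers one fixed construction."""
    for candidate in ["M is the midpoint of BC"]:
        if candidate not in known:
            return candidate
    return None


print(solve(PREMISES, GOAL, RULES, toy_proposer))
```

In this toy case the deduction engine is stuck until the proposer adds the midpoint, after which the goal follows from the two rules. The real system works over formal geometric statements and a trained model rather than strings and a fixed list.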

To train AlphaGeometry without relying on human-written proofs, DeepMind generated synthetic data at scale, creating one billion random diagrams of geometric objects and deriving the relationships between points and lines in each diagram. This process – termed “symbolic deduction and traceback” – resulted in a final training dataset of 100 million unique examples.
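As a rough sketch of the idea behind deduction followed by traceback, the Python below derives every fact it can from a set of premises, remembers which facts each conclusion came from, and then walks backwards from a conclusion to the premises it actually used. The abstract facts (`a`, `b`, ...), the rule format, and all function names are hypothetical; the published pipeline starts from randomly generated geometric diagrams rather than a fixed toy set.

```python
from typing import Dict, FrozenSet, List, Set, Tuple

Rule = Tuple[FrozenSet[str], str]  # (required facts, conclusion)


def deduce_with_parents(premises: Set[str],
                        rules: List[Rule]) -> Dict[str, FrozenSet[str]]:
    """Forward-chain and record which facts each conclusion came from.

    Premises map to an empty frozenset because nothing derives them.
    """
    parents: Dict[str, FrozenSet[str]] = {p: frozenset() for p in premises}
    changed = True
    while changed:
        changed = False
        for needs, conclusion in rules:
            if conclusion not in parents and all(n in parents for n in needs):
                parents[conclusion] = needs
                changed = True
    return parents


def traceback(fact: str, parents: Dict[str, FrozenSet[str]]) -> Set[str]:
    """Walk back from a derived fact to the premises its derivation used."""
    used: Set[str] = set()
    seen: Set[str] = set()
    stack = [fact]
    while stack:
        current = stack.pop()
        if current in seen:
            continue
        seen.add(current)
        if parents[current]:
            stack.extend(parents[current])  # keep walking backwards
        else:
            used.add(current)  # reached an original premise
    return used


def make_examples(premises: Set[str],
                  rules: List[Rule]) -> List[Tuple[FrozenSet[str], str]]:
    """Turn each derived fact into a (premises used, conclusion) pair."""
    parents = deduce_with_parents(premises, rules)
    derived = sorted(f for f, needs in parents.items() if needs)
    return [(frozenset(traceback(f, parents)), f) for f in derived]


# Hypothetical toy rules over abstract facts.
TOY_RULES: List[Rule] = [
    (frozenset({"a", "b"}), "d"),
    (frozenset({"d"}), "e"),
    (frozenset({"c"}), "f"),
]

for used, conclusion in make_examples({"a", "b", "c"}, TOY_RULES):
    print(sorted(used), "=>", conclusion)
```

Each pair keeps only the premises that fed into the recorded derivation, so the unrelated premise `c` is dropped from the example that concludes `e`. Pairs like these are the kind of theorem-and-proof examples a model could be trained on.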

According to DeepMind, AlphaGeometry represents a breakthrough in mathematical reasoning for AI, bringing it closer to the level of human mathematicians. Developing these skills is seen as essential for advancing artificial general intelligence.

Evan Chen, a maths coach and former Olympiad gold medalist, evaluated a sample of AlphaGeometry’s solutions. He said its proofs were not just correct but also clean and human-readable, using standard geometry techniques, unlike the messy numerical solutions often produced when AI systems brute force maths problems.

While AlphaGeometry only handles the geometry portions of Olympiad tests so far, its geometry results alone would have been enough to earn a bronze medal on some past exams. DeepMind hopes to continue improving the system’s mathematical reasoning to the point where it could pass the entire multi-subject Olympiad.

Advancing AI’s understanding of mathematics and logic is a key goal for DeepMind and Google. The researchers believe mastering Olympiad problems brings them one step closer towards more generalised artificial intelligence that can automatically discover new knowledge.

(Photo by Dustin Humes on Unsplash)
