According to a new paper, AI-generated faces have become so advanced that humans now cannot distinguish between real and fake more often than not.

“Our evaluation of the photorealism of AI-synthesized faces indicates that synthesis engines have passed through the uncanny valley and are capable of creating faces that are indistinguishable—and more trustworthy—than real faces,” the researchers explained.

The researchers – Sophie J. Nightingale, Department of Psychology, Lancaster University, and Hany Farid, Department of Electrical Engineering and Computer Sciences, University of California, Berkeley – highlight the worrying trend of “deepfakes” being weaponised.

Video, audio, text, and imagery generated by generative adversarial networks (GANs) are increasingly being used for nonconsensual intimate imagery, financial fraud, and disinformation campaigns.

GANs work by pitting two neural networks – a generator and a discriminator – against each other. The generator will start with random pixels and will keep improving the image to avoid penalisation from the discriminator. This process continues until the discriminator can no longer distinguish a synthesised face from a real one.
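
To make that tug-of-war concrete, here is a minimal sketch in plain Python. It is a toy under loud assumptions and not the StyleGAN2 setup the paper evaluates: the “images” are single numbers, the generator is a straight line, the discriminator is a logistic classifier, and every name and default value (`disc_step`, `gen_step`, `train`, the learning rate, the real-data mean of 4.0) is hypothetical and chosen only for illustration.

```python
import math
import random


def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))


def generate(gen, z):
    """Generator: turn random noise z into a fake sample (toy: a line)."""
    a, b = gen
    return a * z + b


def discriminate(disc, x):
    """Discriminator: estimated probability that sample x is real."""
    w, c = disc
    return sigmoid(w * x + c)


def disc_loss(disc, reals, fakes):
    # The discriminator is penalised for doubting reals or believing fakes.
    real_term = sum(-math.log(discriminate(disc, x)) for x in reals)
    fake_term = sum(-math.log(1.0 - discriminate(disc, x)) for x in fakes)
    return (real_term + fake_term) / len(reals)


def gen_loss(gen, disc, noise):
    # The generator is penalised whenever the discriminator spots its fakes.
    total = sum(-math.log(discriminate(disc, generate(gen, z))) for z in noise)
    return total / len(noise)


def disc_step(disc, reals, fakes, lr):
    """One gradient-descent step on disc_loss (gradients derived by hand)."""
    w, c = disc
    gw = gc = 0.0
    for x in reals:
        err = discriminate(disc, x) - 1.0  # target output is 1 for real
        gw += err * x
        gc += err
    for x in fakes:
        err = discriminate(disc, x)  # target output is 0 for fake
        gw += err * x
        gc += err
    n = len(reals)
    return (w - lr * gw / n, c - lr * gc / n)


def gen_step(gen, disc, noise, lr):
    """One gradient-descent step on gen_loss; the discriminator stays fixed."""
    a, b = gen
    w, _ = disc
    ga = gb = 0.0
    for z in noise:
        err = (discriminate(disc, generate(gen, z)) - 1.0) * w
        ga += err * z
        gb += err
    n = len(noise)
    return (a - lr * ga / n, b - lr * gb / n)


def train(steps=2000, batch=32, lr=0.05, seed=0):
    """Alternate discriminator and generator updates on fresh batches."""
    rng = random.Random(seed)
    gen, disc = (1.0, 0.0), (0.0, 0.0)
    for _ in range(steps):
        reals = [rng.gauss(4.0, 1.0) for _ in range(batch)]  # stand-in "real" data
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fakes = [generate(gen, z) for z in noise]
        disc = disc_step(disc, reals, fakes, lr)
        gen = gen_step(gen, disc, noise, lr)
    return gen, disc
```

Calling `train()` returns the final generator and discriminator parameters. A toy this small may oscillate rather than settle, which hints at why training real GANs is delicate. Production systems replace both the line and the logistic classifier with deep convolutional networks trained on large image datasets.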

Just as the discriminator could no longer distinguish a synthesised face from a real one, neither could human participants. In the study, participants correctly classified faces as real or synthetic just 48.2 percent of the time, slightly worse than the 50 percent expected from guessing.

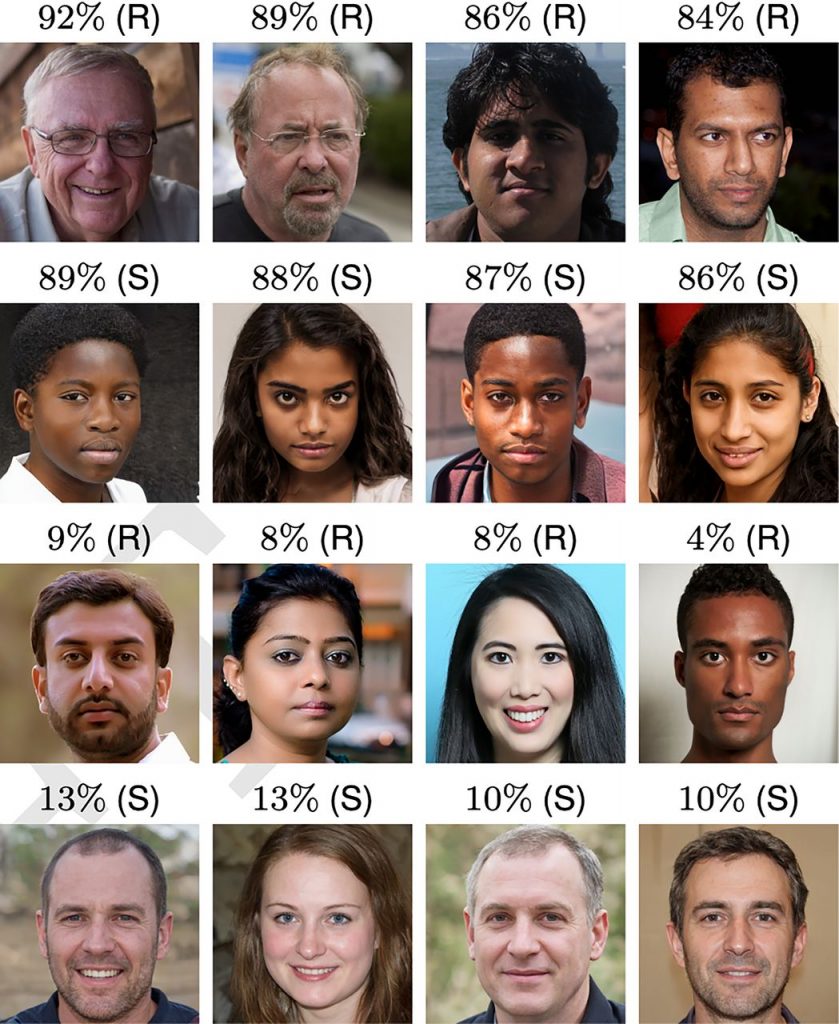

Among real faces, accuracy was higher for East Asian and White male faces than for their female counterparts. However, among both male and female synthetic faces, White faces were the least accurately identified, and White male faces were identified less accurately than White female faces.

The researchers hypothesised that “White faces are more difficult to classify because they are overrepresented in the StyleGAN2 training dataset and are therefore more realistic.”

Here are the most (top and upper-middle lines) and least (bottom and lower-middle) accurately classified real (R) and synthetic (S) faces:

There’s a glimmer of hope for humans: after being given training on how to spot fakes, participants classified faces correctly 59 percent of the time. That’s not a particularly comfortable percentage, but it at least tips the scales towards humans spotting fakes more often than not.

What sets the alarm bells ringing again is that synthetic faces were rated more “trustworthy” than real ones. On a scale of 1 (very untrustworthy) to 7 (very trustworthy), the average rating of 4.48 for real faces is lower than the average of 4.82 for synthetic faces.

“A smiling face is more likely to be rated as trustworthy, but 65.5 per cent of our real faces and 58.8 per cent of synthetic faces are smiling, so facial expression alone cannot explain why synthetic faces are rated as more trustworthy,” wrote the researchers.

The paper’s results underline the importance of developing tools that can spot the increasingly small differences between real and synthetic faces, because humans will struggle even if everyone were specifically trained.

With Western intelligence agencies calling out fake content allegedly from Russian authorities to justify an invasion of Ukraine, the increasing ease with which such media can be generated en masse poses a serious threat that’s no longer the stuff of fiction.

(Photo by NeONBRAND on Unsplash)
