OpenAI is making it easy for developers to “fine-tune” GPT-3, enabling custom models for their applications.

The company says that existing datasets of “virtually any shape and size” can be used for custom models.

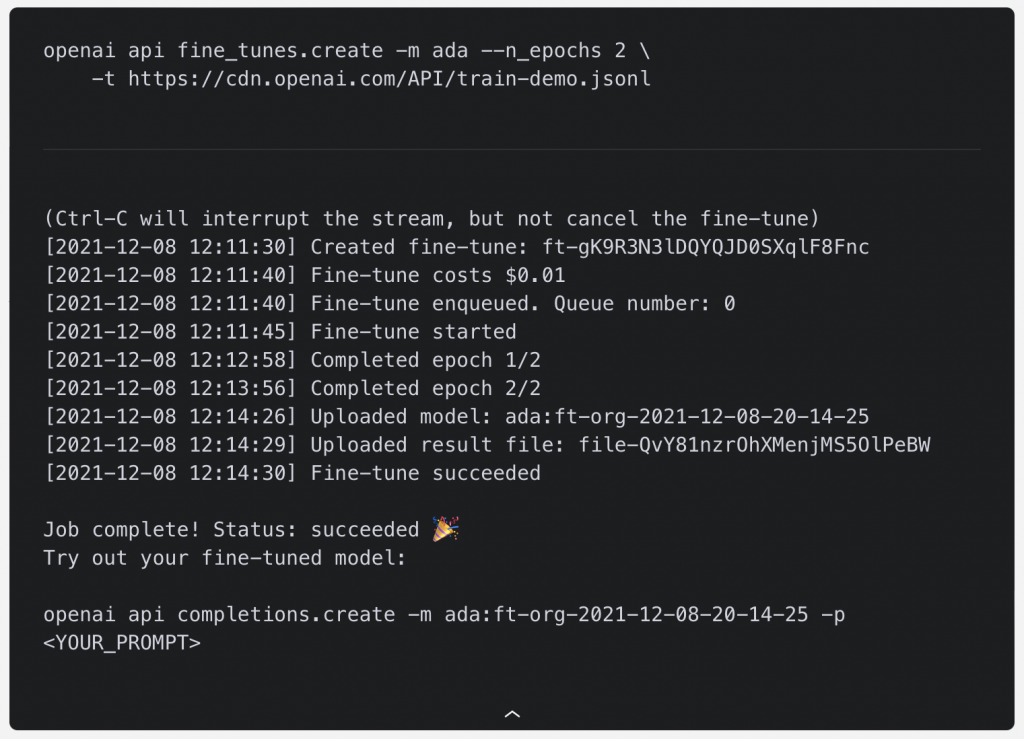

A single command in the OpenAI command-line tool, together with a user-provided training file, is all it takes to begin training. Once training finishes, the custom GPT-3 model is available for use in OpenAI’s API immediately.
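As a rough illustration of what a user-provided file might look like, the sketch below writes a small training set as JSON Lines, one prompt/completion pair per line. The article does not specify the file format; the `prompt`/`completion` layout is an assumption based on OpenAI's fine-tuning documentation from around the time of the announcement. The example texts, the `###` separator, the helper name `write_training_file`, and the file name `train.jsonl` are all hypothetical.

```python
import json
from pathlib import Path

# Hypothetical training pairs. The "prompt"/"completion" keys are an assumption
# based on OpenAI's fine-tuning docs of the period, not something the article states.
EXAMPLES = [
    {
        "prompt": "Summarise: The trial found the drug reduced symptoms.\n\n###\n\n",
        "completion": " The drug reduced symptoms.\n",
    },
    {
        "prompt": "Summarise: The survey showed most users prefer dark mode.\n\n###\n\n",
        "completion": " Most users prefer dark mode.\n",
    },
]


def write_training_file(examples, path):
    """Write one JSON object per line (JSONL) and return the number of records."""
    path = Path(path)
    with path.open("w", encoding="utf-8") as f:
        for example in examples:
            # Reject records that do not have exactly the two expected keys.
            if set(example) != {"prompt", "completion"}:
                raise ValueError(f"unexpected keys: {sorted(example)}")
            f.write(json.dumps(example, ensure_ascii=False) + "\n")
    return len(examples)


if __name__ == "__main__":
    count = write_training_file(EXAMPLES, "train.jsonl")
    print(f"wrote {count} examples to train.jsonl")
    # The documentation of the period then described a single CLI call along the
    # lines of: openai api fine_tunes.create -t train.jsonl -m <BASE_MODEL>
    # Treat that command as an assumption; the current tooling may differ.
```

In practice, a real dataset would hold far more than two pairs; the Elicit quote below refers to tasks with 500 or more training examples.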

One customer says that it was able to increase correct outputs from 83 percent to 95 percent through fine-tuning. Another client reduced error rates by 50 percent.

Andreas Stuhlmüller, Co-Founder of Elicit, said:

“Since we started integrating fine-tuning into Elicit, for tasks with 500+ training examples, we’ve found that fine-tuning usually results in better speed and quality at a lower cost than few-shot learning.

This has been essential for making Elicit responsive at the same time as increasing its accuracy at summarising complex research statements.

As far as we can tell, this wouldn’t have been doable without fine-tuning GPT-3.”

Joel Hellermark, CEO of Sana Labs, commented:

“With OpenAI’s customised models, fine-tuned on our data, Sana’s question and content generation went from grammatically correct but general responses to highly accurate semantic outputs which are relevant to the key learnings.

This yielded a 60 percent improvement when compared to non-custom models, enabling fundamentally more personalised and effective experiences for our learners.”

In June, Gartner predicted that, by 2024, 80 percent of technology products and services will be built by people who are not technology professionals. By making custom AI models easy to create, OpenAI aims to help unlock the full potential of such products and services.


(Photo by Sigmund on Unsplash)
